Starting 2000 Cloud Servers for Benchmarking LLM Inference Speed

Gergely Daróczi

Apr 24, 2025

Slides: sparecores.com/talks

SYN [SEQ=0] Hi, we are Spare Cores.

SYN-ACK [SEQ=1] OK .. So what?

ACK [SEQ=2] We bring clarity to cloud server options! I’ll tell you more!

🤝 🍻

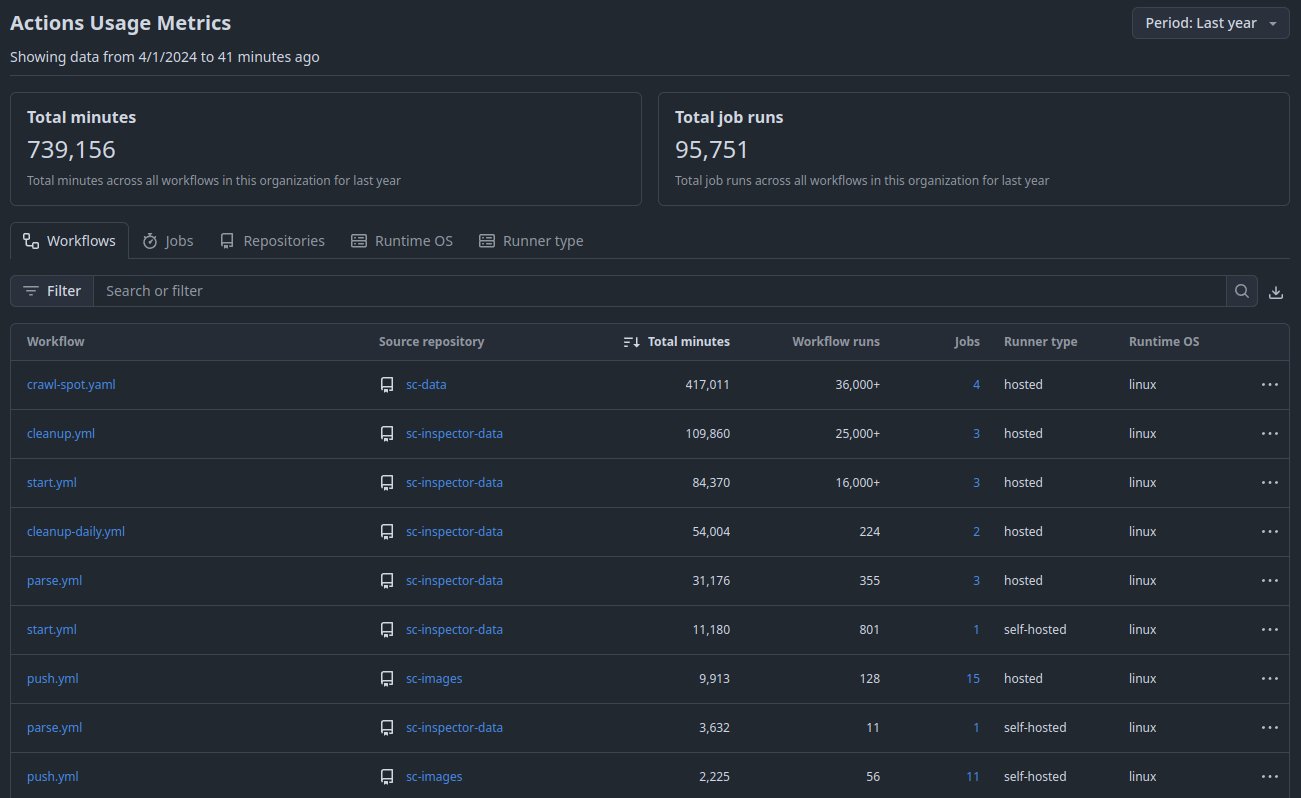

>>> from sparecores import intro

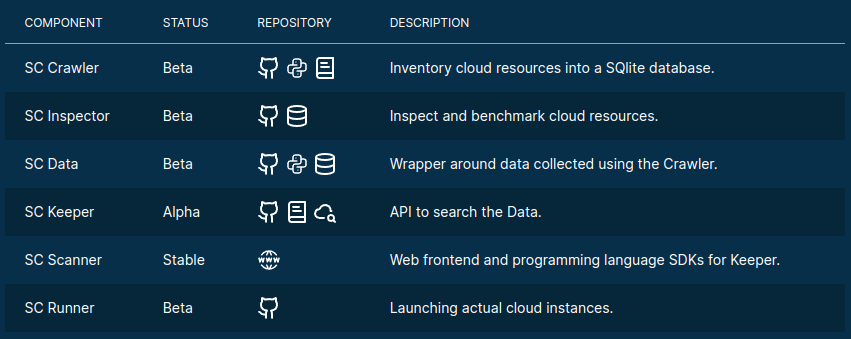

- Open-source tools, database schemas and documentation to inspect and inventory cloud vendors and their compute resource offerings.

- Managed infrastructure, databases, APIs, SDKs, and web applications to make these data sources publicly accessible.

- Helpers to start and manage instances in your own environment.

- Helpers to monitor resource usage at the system and batch-task level, and to recommend cloud server options for future runs.

- SaaS to run containers in a managed environment without direct vendor engagement.

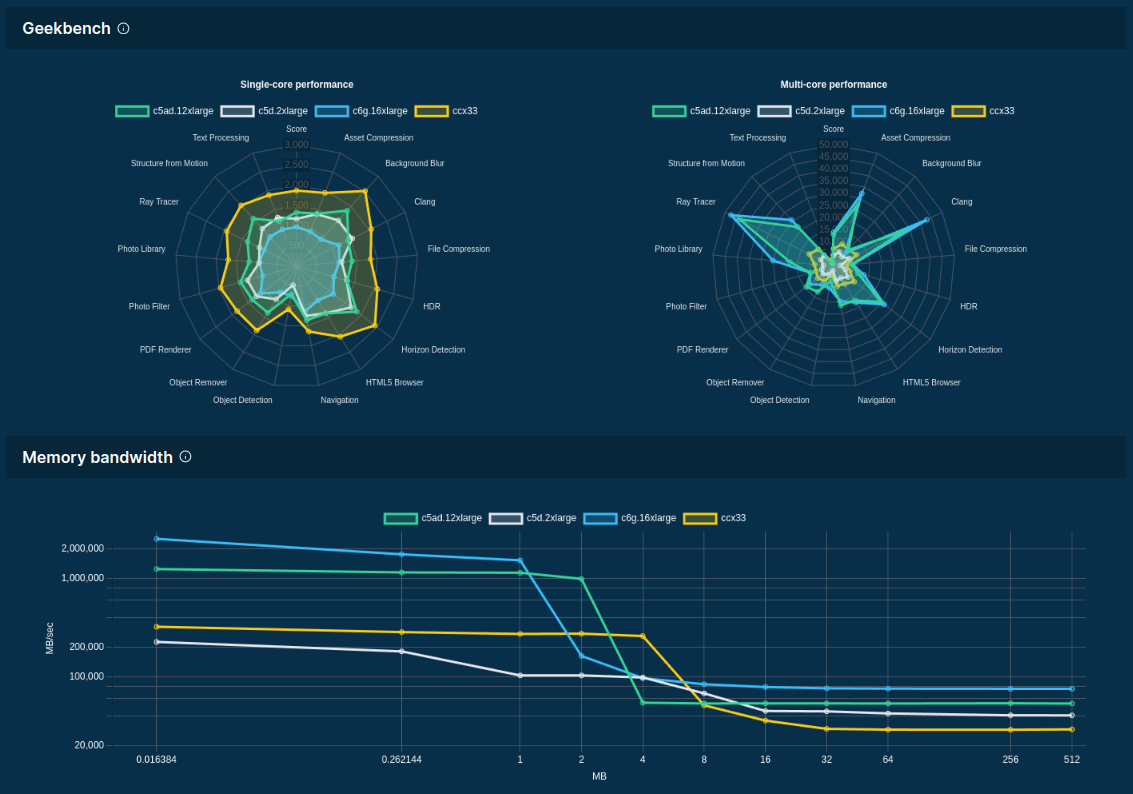

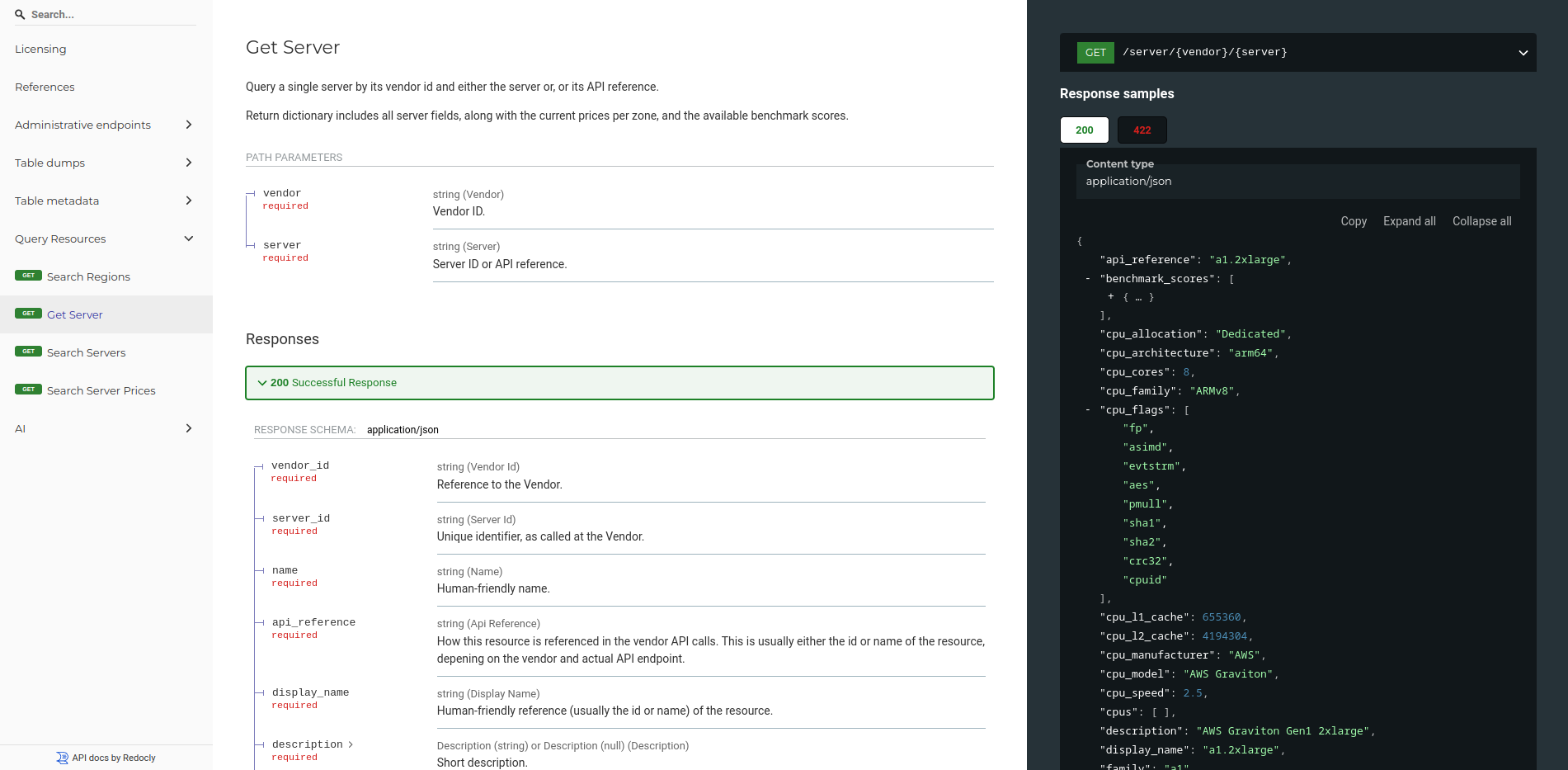

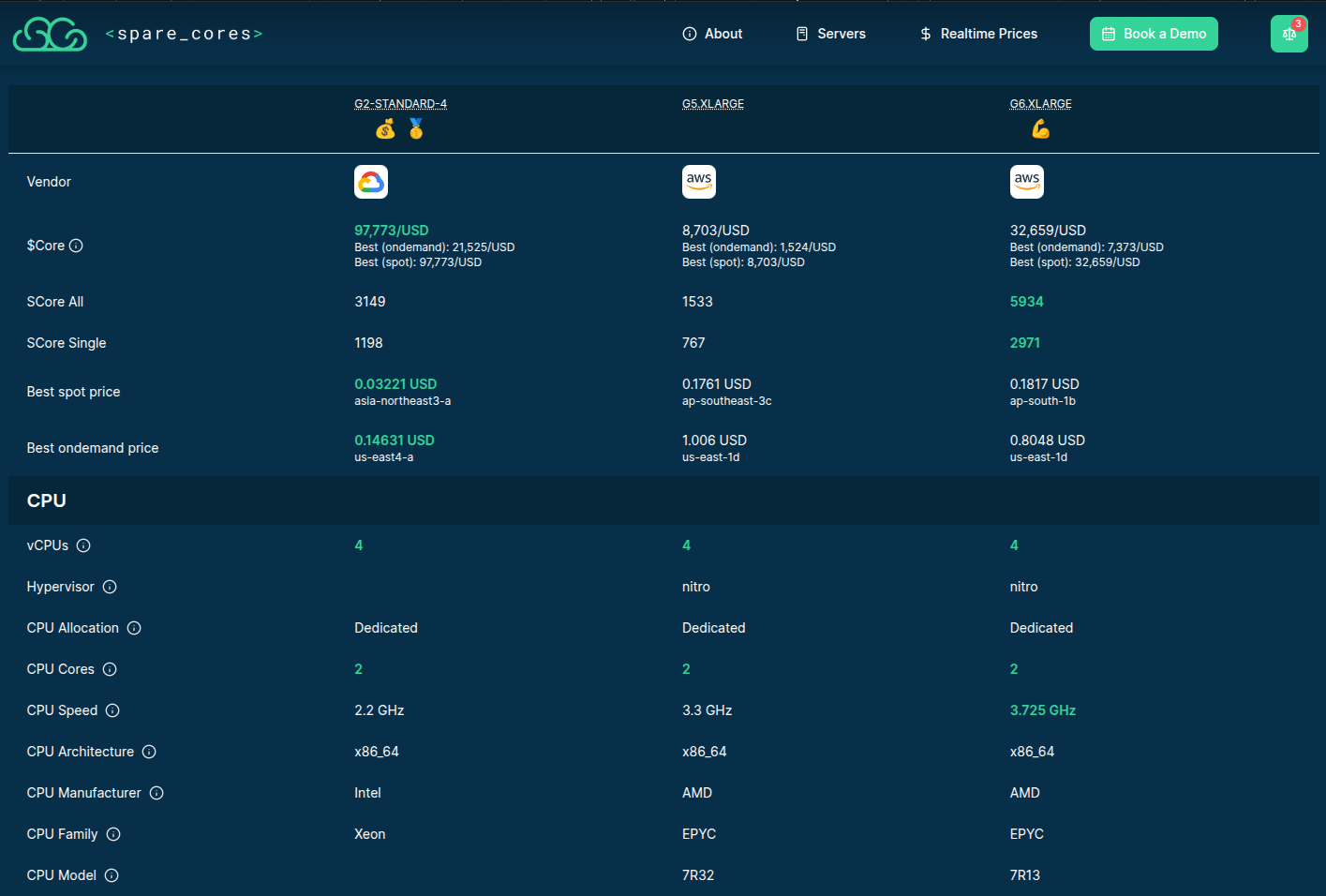

Source: sparecores.com


>>> from rich import print as pp
>>> from sc_crawler.tables import Server
>>> from sqlmodel import create_engine, Session, select
>>> engine = create_engine("sqlite:///sc-data-all.db")
>>> session = Session(engine)
>>> server = session.exec(select(Server).where(Server.server_id == 'g4dn.xlarge')).one()
>>> pp(server)
Server(
    server_id='g4dn.xlarge',
    vendor_id='aws',
    display_name='g4dn.xlarge',
    api_reference='g4dn.xlarge',
    name='g4dn.xlarge',
    family='g4dn',
    description='Graphics intensive [Instance store volumes] [Network and EBS optimized] Gen4 xlarge',
    status=<Status.ACTIVE: 'active'>,
    observed_at=datetime.datetime(2024, 6, 6, 10, 18, 4, 127254),
    hypervisor='nitro',
    vcpus=4,
    cpu_cores=2,
    cpu_allocation=<CpuAllocation.DEDICATED: 'Dedicated'>,
    cpu_manufacturer='Intel',
    cpu_family='Xeon',
    cpu_model='8259CL',
    cpu_architecture=<CpuArchitecture.X86_64: 'x86_64'>,
    cpu_speed=3.5,
    cpu_l1_cache=None,
    cpu_l2_cache=None,
    cpu_l3_cache=None,
    cpu_flags=[],
    memory_amount=16384,
    memory_generation=<DdrGeneration.DDR4: 'DDR4'>,
    memory_speed=3200,
    memory_ecc=None,
    gpu_count=1,
    gpu_memory_min=16384,
    gpu_memory_total=16384,
    gpu_manufacturer='Nvidia',
    gpu_family='Turing',
    gpu_model='Tesla T4',
    gpus=[
        {
            'manufacturer': 'Nvidia',
            'family': 'Turing',
            'model': 'Tesla T4',
            'memory': 15360,
            'firmware_version': '535.171.04',
            'bios_version': '90.04.96.00.A0',
            'graphics_clock': 1590,
            'sm_clock': 1590,
            'mem_clock': 5001,
            'video_clock': 1470
        }
    ],
    storage_size=125,
    storage_type=<StorageType.NVME_SSD: 'nvme ssd'>,
    storages=[{'size': 125, 'storage_type': 'nvme ssd'}],
    network_speed=5.0,
    inbound_traffic=0.0,
    outbound_traffic=0.0,
    ipv4=0,
)
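The lookup above relies on the sc-data-all.db file and the sc_crawler models. To show the same idea without those dependencies, here is a minimal sketch using only Python's built-in sqlite3 module and an in-memory table. The table name `server`, the reduced column set, the assumption that `memory_amount` is in MiB, and the second (placeholder) row are all illustrative assumptions, not the actual schema or data of the Spare Cores database.

```python
import sqlite3

# Simplified stand-in for the real database: the table name and columns are
# assumptions, reduced to a few fields visible in the Server record above.
conn = sqlite3.connect(":memory:")
conn.row_factory = sqlite3.Row
conn.execute(
    "CREATE TABLE server ("
    "vendor_id TEXT, server_id TEXT, vcpus INTEGER, "
    "memory_amount INTEGER, gpu_count INTEGER, gpu_model TEXT)"
)
conn.executemany(
    "INSERT INTO server VALUES (?, ?, ?, ?, ?, ?)",
    [
        # Values copied from the g4dn.xlarge record shown above.
        ("aws", "g4dn.xlarge", 4, 16384, 1, "Tesla T4"),
        # Hypothetical placeholder row, not a real server.
        ("example", "cpu-only.small", 2, 4096, 0, None),
    ],
)


def gpu_servers(connection, min_memory):
    """Return servers with at least one GPU and at least `min_memory` RAM."""
    query = (
        "SELECT vendor_id, server_id, gpu_model FROM server "
        "WHERE gpu_count > 0 AND memory_amount >= ? ORDER BY server_id"
    )
    return [dict(row) for row in connection.execute(query, (min_memory,))]


matches = gpu_servers(conn, 8192)
print(matches)
```

Against the real database, the SQLModel query shown on the slide remains the more convenient route, since the `Server` model already knows the column types and enums.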

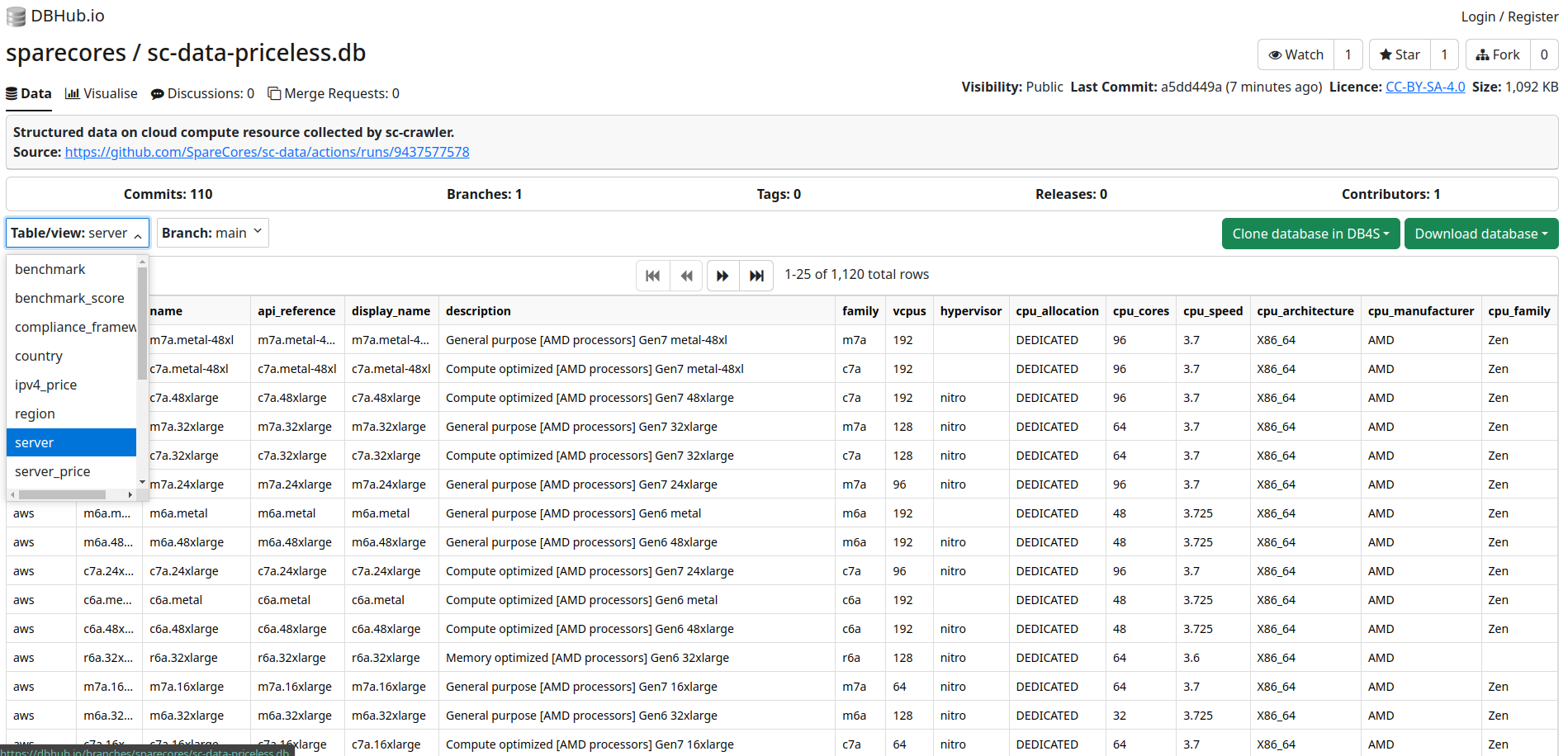

Source: dbhub.io/sparecores

>>> sparecores.__dir__()

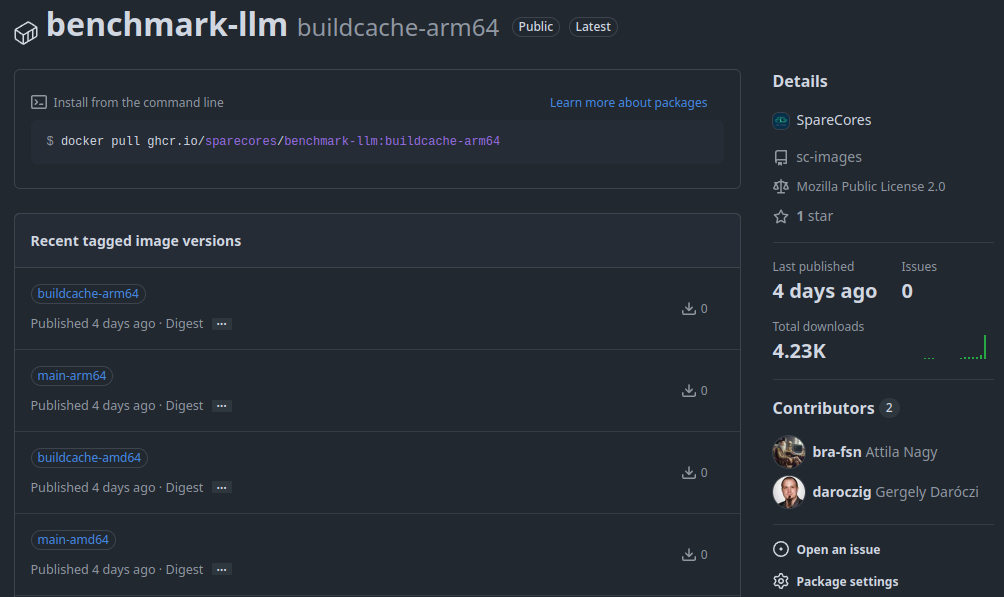

>>> from sc_inspector import llm

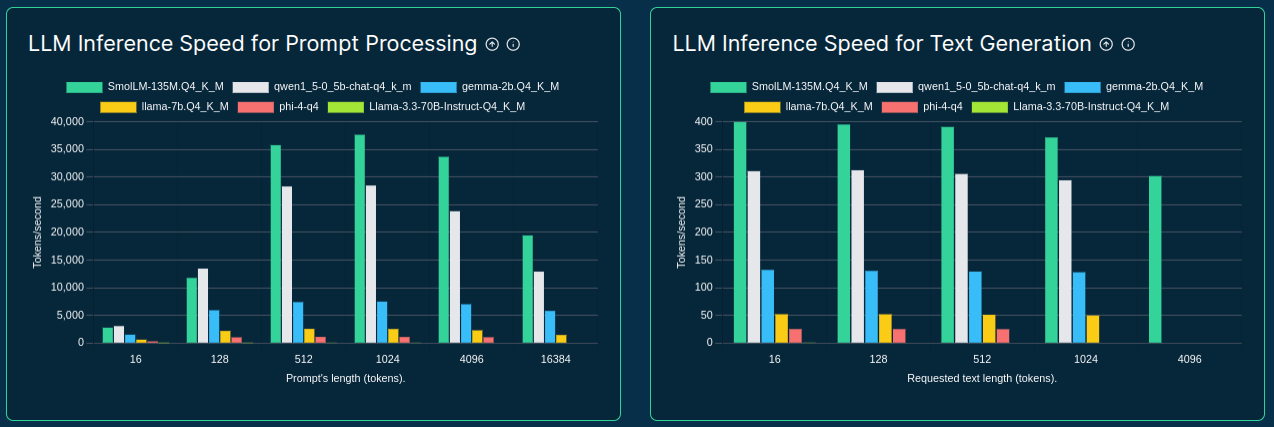

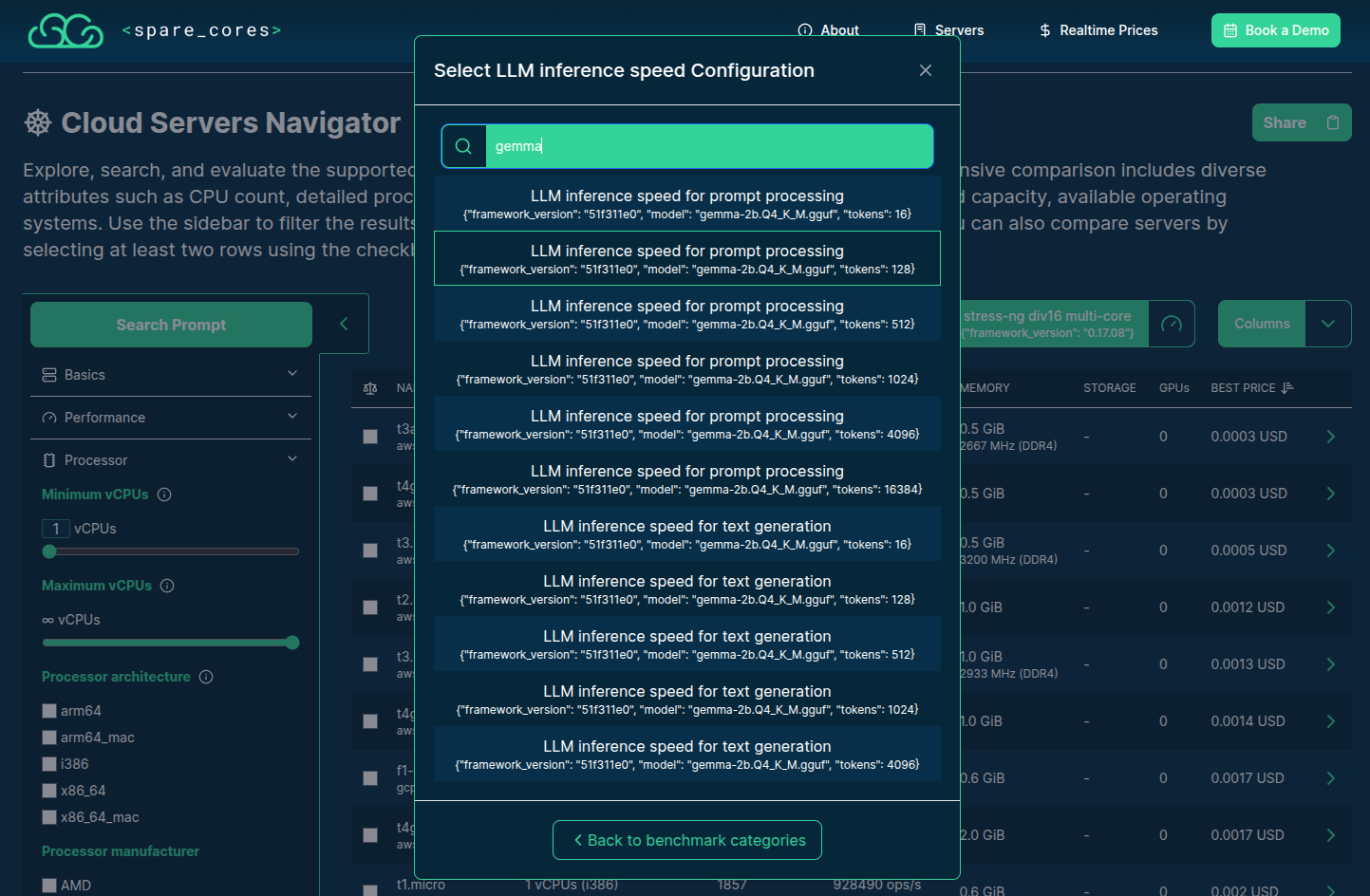

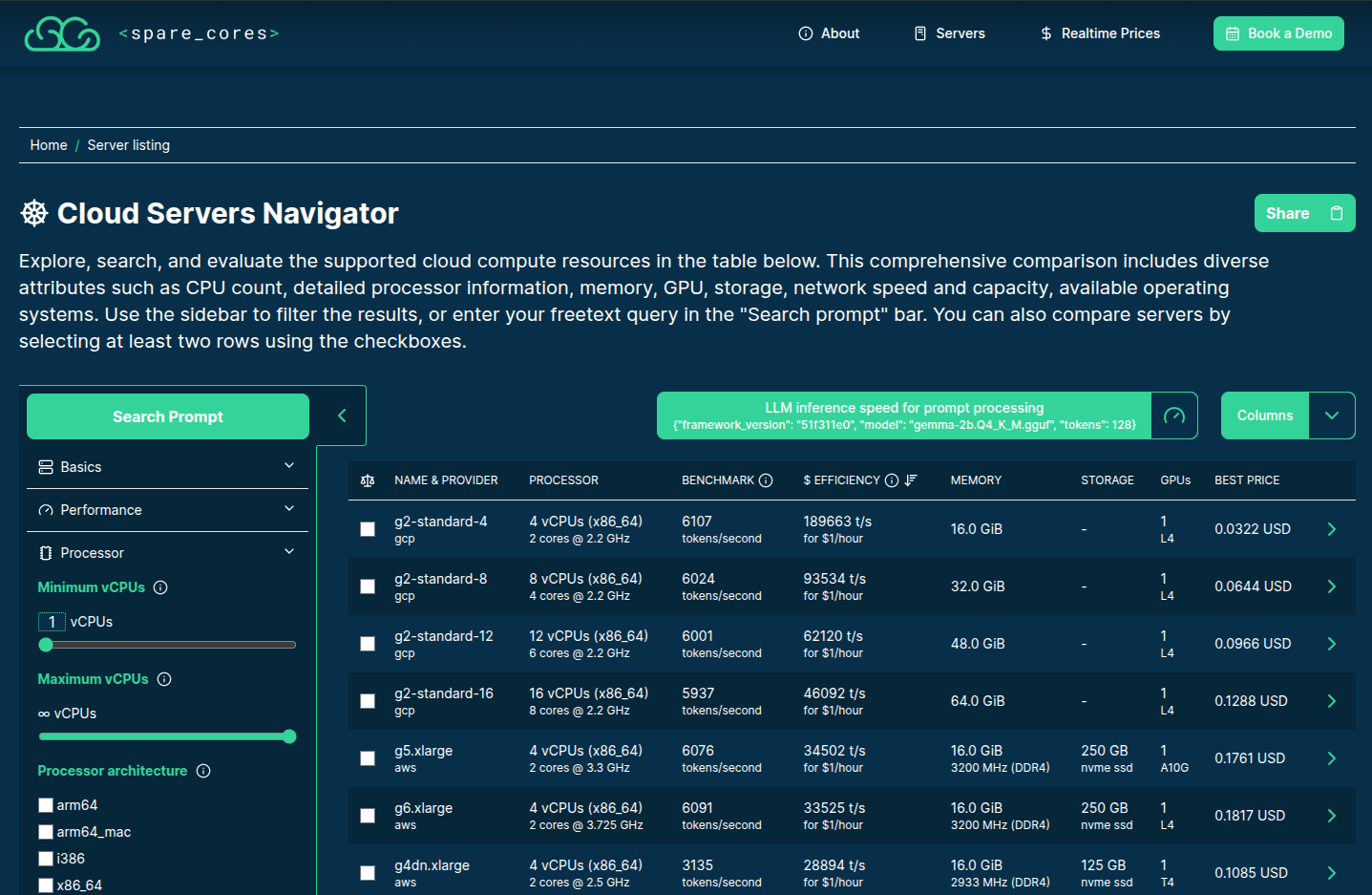

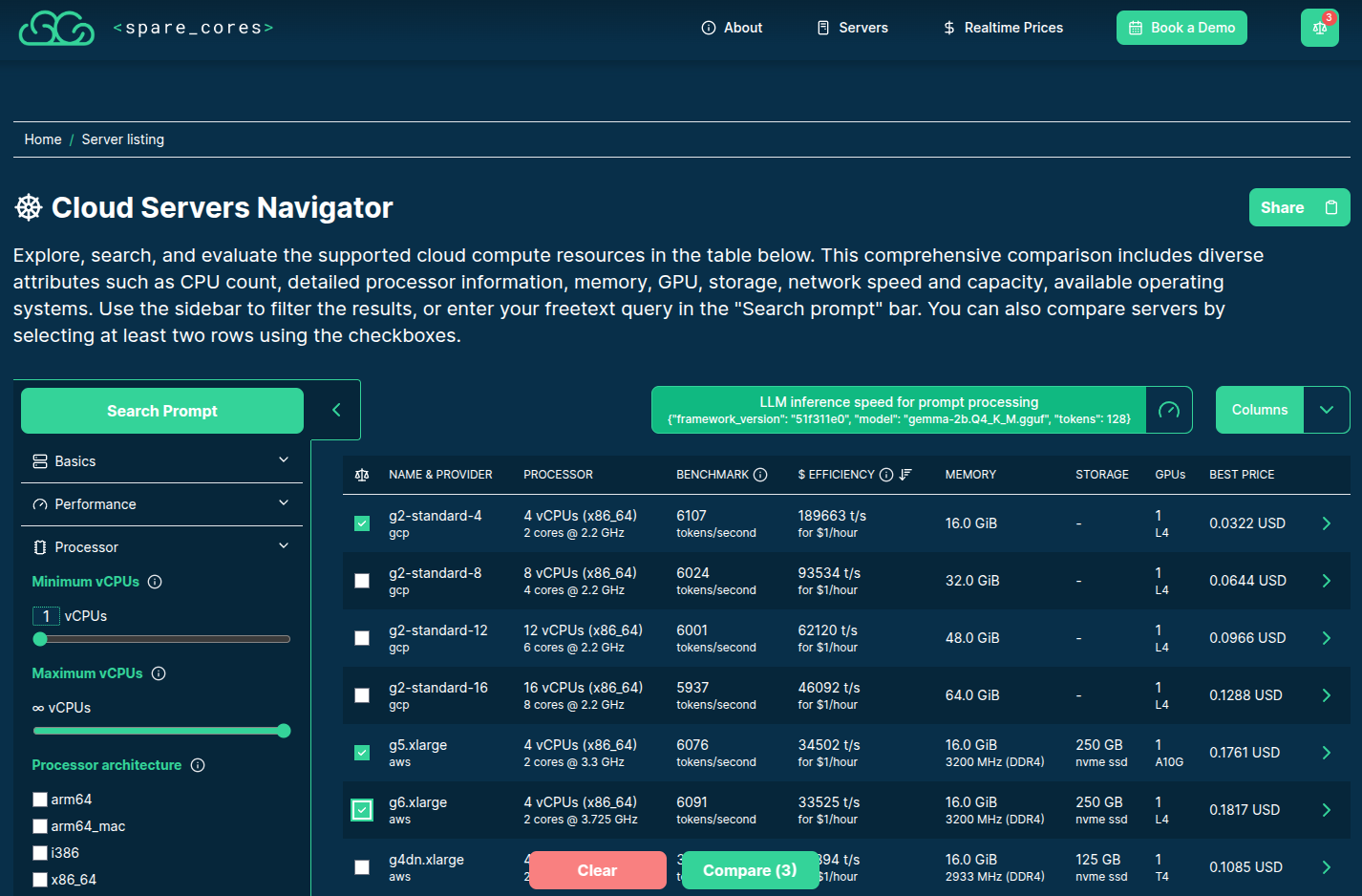

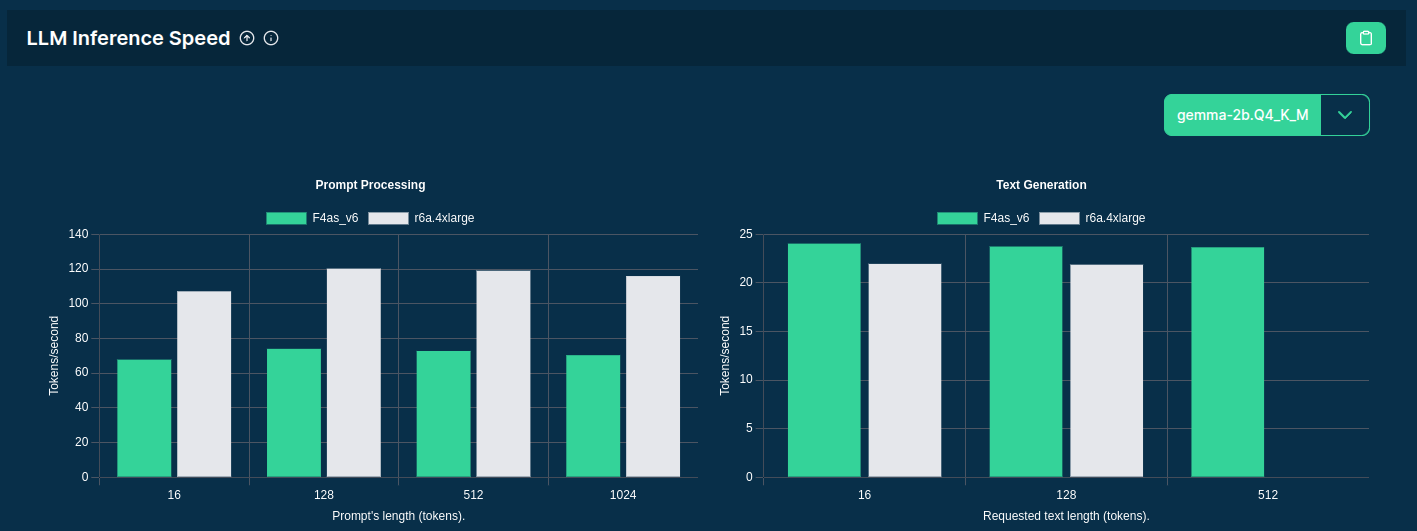

- Text generation: 16, 128, 512, 1k, 4k, 16k tokens

- Prompt processing: 16, 128, 512, 1k, 4k tokens

| Model | Parameters | File Size |
|---|---|---|
| SmolLM-135M.Q4_K_M.gguf | 135M | 100MB |
| qwen1_5-0_5b-chat-q4_k_m.gguf | 500M | 400MB |
| gemma-2b.Q4_K_M.gguf | 2B | 1.5GB |
| llama-7b.Q4_K_M.gguf | 7B | 4GB |
| phi-4-q4.gguf | 14B | 9GB |
| Llama-3.3-70B-Instruct-Q4_K_M.gguf | 70B | 42GB |

Expected tokens/second: 1/2/5/10/25/50/250/1k/4k
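To get a feel for the size of the benchmark matrix described above, the following sketch enumerates every combination of model, task and token length. It is only an illustration: reading "1k/4k/16k" as 1024/4096/16384 is an assumption, and the `llm_speed:text_generation` identifier is assumed by analogy with `llm_speed:prompt_processing`, which is the only benchmark id that appears in these slides.

```python
# Model files as listed in the table above.
MODELS = [
    "SmolLM-135M.Q4_K_M.gguf",
    "qwen1_5-0_5b-chat-q4_k_m.gguf",
    "gemma-2b.Q4_K_M.gguf",
    "llama-7b.Q4_K_M.gguf",
    "phi-4-q4.gguf",
    "Llama-3.3-70B-Instruct-Q4_K_M.gguf",
]

# Token lengths from the slide; "k" is assumed to mean 1024.
TEXT_GENERATION_TOKENS = [16, 128, 512, 1024, 4096, 16384]
PROMPT_PROCESSING_TOKENS = [16, 128, 512, 1024, 4096]


def benchmark_grid():
    """List every (benchmark_id, model, tokens) combination."""
    grid = []
    for model in MODELS:
        for tokens in TEXT_GENERATION_TOKENS:
            # Benchmark id assumed by analogy, see the note above.
            grid.append(("llm_speed:text_generation", model, tokens))
        for tokens in PROMPT_PROCESSING_TOKENS:
            grid.append(("llm_speed:prompt_processing", model, tokens))
    return grid


grid = benchmark_grid()
print(len(grid))  # 6 models x (6 + 5) token lengths = 66
```

Each server would therefore face up to 66 measurements, which is presumably why the expected tokens/second thresholds matter for cutting slow runs short.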

$ benchmark_config=$(jq -nc \
    --arg version "51f311e0" \
    --arg model "SmolLM-135M.Q4_K_M.gguf" \
    --argjson tokens 128 \
    '{framework_version: $version, model: $model, tokens: $tokens}')

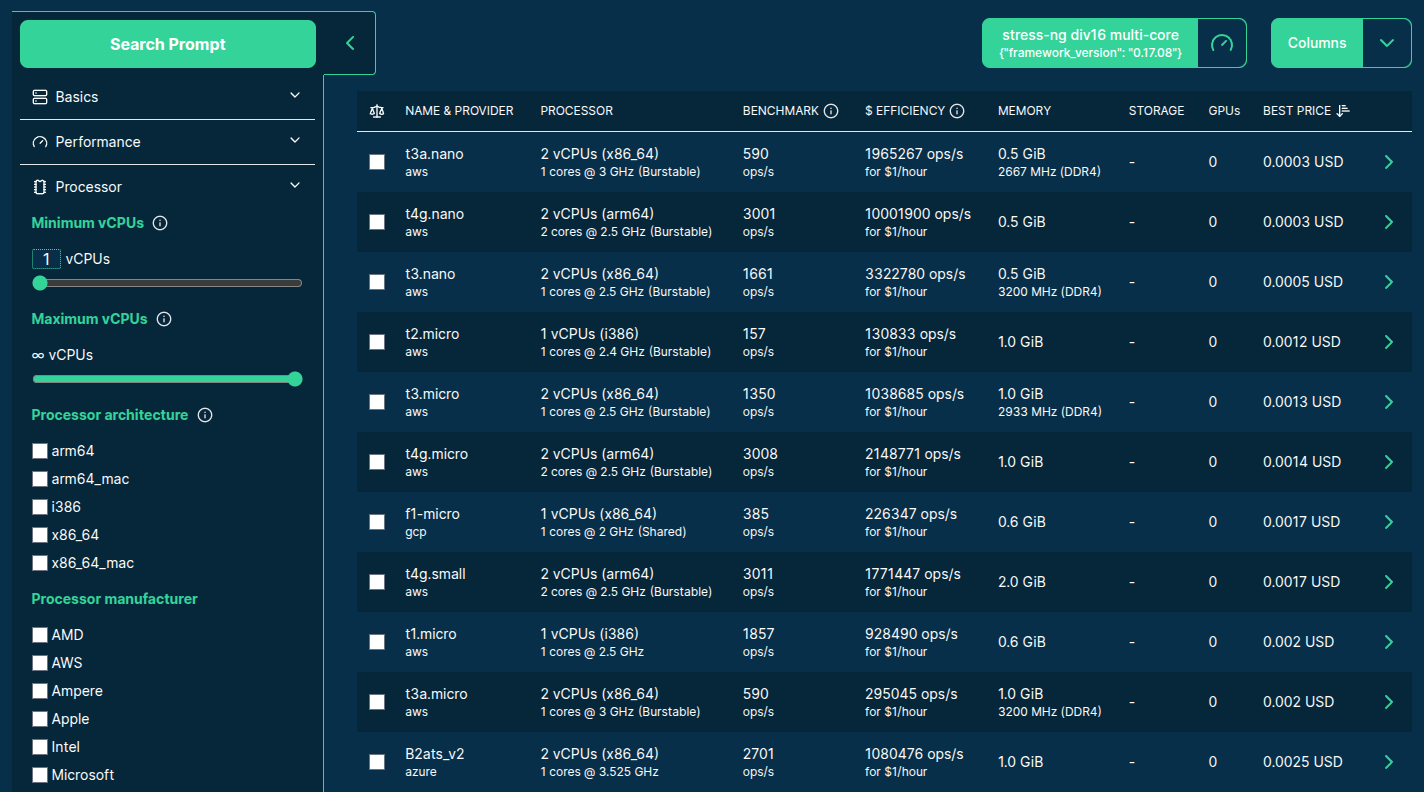

$ curl -s -D - "https://keeper.sparecores.net/servers" \
    -G \
    --data-urlencode "benchmark_score_min=1" \
    --data-urlencode "add_total_count_header=true" \
    --data-urlencode "limit=25" \
    --data-urlencode "benchmark_config=$benchmark_config" \
    --data-urlencode "benchmark_id=llm_speed:prompt_processing" \
    -o /dev/null | grep -i x-total-count
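For those who prefer Python over jq and curl, the request URL from the commands above can be assembled with the standard library alone. This is a sketch, not an official client: the helper name `build_servers_url` is hypothetical, and only the endpoint, parameter names and values that appear in the shell commands are used. The snippet builds the URL without sending the request.

```python
import json
from urllib.parse import parse_qs, urlencode, urlsplit

BASE_URL = "https://keeper.sparecores.net/servers"


def build_servers_url(framework_version, model, tokens, benchmark_id, limit=25):
    """Build the query URL used in the curl example (hypothetical helper)."""
    # Compact JSON, mirroring the output of `jq -nc`.
    benchmark_config = json.dumps(
        {"framework_version": framework_version, "model": model, "tokens": tokens},
        separators=(",", ":"),
    )
    params = {
        "benchmark_score_min": 1,
        "add_total_count_header": "true",
        "limit": limit,
        "benchmark_config": benchmark_config,
        "benchmark_id": benchmark_id,
    }
    return f"{BASE_URL}?{urlencode(params)}"


url = build_servers_url(
    "51f311e0", "SmolLM-135M.Q4_K_M.gguf", 128, "llm_speed:prompt_processing"
)
# Decode the query string again to check what would be sent.
decoded = parse_qs(urlsplit(url).query)
print(decoded["benchmark_id"][0])
print(json.loads(decoded["benchmark_config"][0]))
```

Fetching the URL (for example with `urllib.request.urlopen`) and reading the `x-total-count` response header would then give the same number as the `grep` at the end of the curl pipeline.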

>>> input("How much did it cost?!")

| Vendor | Cost |
|---|---|
| AWS | 2153.68 USD |
| GCP | 696.9 USD |
| Azure | 8036.71 USD |
| Hetzner | 8.65 EUR |
| UpCloud | 170.21 EUR |

Overall: -

Thanks for the cloud credits! 🙇
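Since the costs are reported in two currencies, a single overall figure would need an exchange rate that the slides do not give. As a small sketch, the per-vendor amounts from the table can at least be totalled per currency; `Decimal` is used to avoid floating-point rounding on monetary values, and no currency conversion is attempted.

```python
from collections import defaultdict
from decimal import Decimal

# (vendor, amount, currency) as listed in the cost table above.
COSTS = [
    ("AWS", "2153.68", "USD"),
    ("GCP", "696.9", "USD"),
    ("Azure", "8036.71", "USD"),
    ("Hetzner", "8.65", "EUR"),
    ("UpCloud", "170.21", "EUR"),
]


def totals_by_currency(costs):
    """Sum the amounts separately for each currency."""
    totals = defaultdict(Decimal)  # Decimal() defaults to 0
    for _vendor, amount, currency in costs:
        totals[currency] += Decimal(amount)
    return dict(totals)


totals = totals_by_currency(COSTS)
for currency, total in totals.items():
    print(f"{total:.2f} {currency}")
# prints:
# 10887.29 USD
# 178.86 EUR
```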

>>> input("Known limitations?")

- Cannot scale to multiple GPUs with small models

- Needs CUDA for GPU-accelerated inference

- Only the CPU is utilized on servers with AMD, Habana, etc. accelerators

- Even some NVIDIA GPUs (e.g. T4G) are incompatible

More details: Spare Cores listing for GPU-accelerated instances
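The limitations above boil down to a simple decision rule about which servers can be expected to run GPU-accelerated inference. The sketch below encodes that rule for illustration only: the function name, the `"cuda"`/`"cpu"` labels and the contents of the incompatibility set are hypothetical (only T4G is named on the slide), and this is not how the benchmarking code itself decides.

```python
# Hypothetical list of NVIDIA models known not to work; the slide only
# mentions T4G as an example.
CUDA_INCOMPATIBLE_MODELS = {"T4G"}


def pick_backend(gpu_count, gpu_manufacturer, gpu_model):
    """Return "cuda" for servers that are candidates for GPU inference, else "cpu"."""
    if gpu_count < 1:
        return "cpu"
    # CUDA is required, so non-NVIDIA accelerators fall back to the CPU.
    if (gpu_manufacturer or "").lower() != "nvidia":
        return "cpu"
    # Even some NVIDIA GPUs are incompatible.
    if gpu_model in CUDA_INCOMPATIBLE_MODELS:
        return "cpu"
    return "cuda"


for args in [(1, "Nvidia", "Tesla T4"), (1, "Nvidia", "T4G"), (8, "Habana", None)]:
    print(args, "->", pick_backend(*args))
```

The arguments mirror the `gpu_count`, `gpu_manufacturer` and `gpu_model` fields of the `Server` record shown earlier, so the rule could be applied directly to rows from the database.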

>>> input("Best server for LLMs?")

It depends …

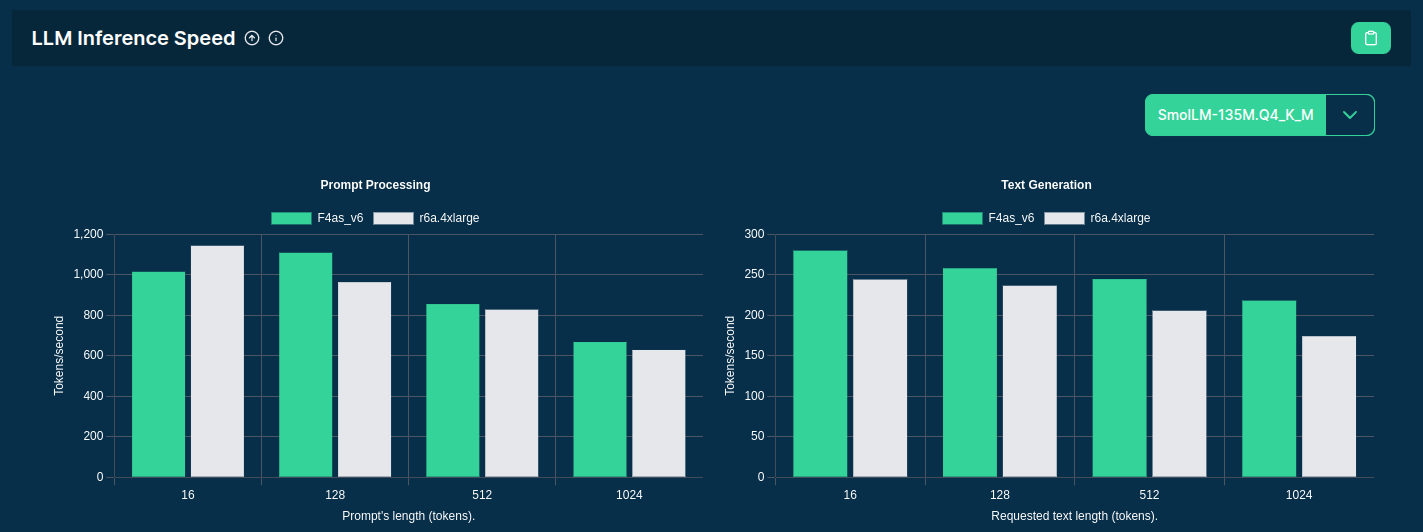

Source: F4AS_V6 vs r6a.4xlarge

>>> from sparecores import team


@bra-fsn

Infrastructure and Python veteran.

@palabola

Guardian of the front-end and Node.js tools.

@daroczig

Hack of all trades, master of NaN.

Slides: sparecores.com/talks