Spare Cores introduction and project updates

Gergely Daróczi

Jan 30, 2025

Slides: sparecores.com/talks

>>> from spare_cores import why

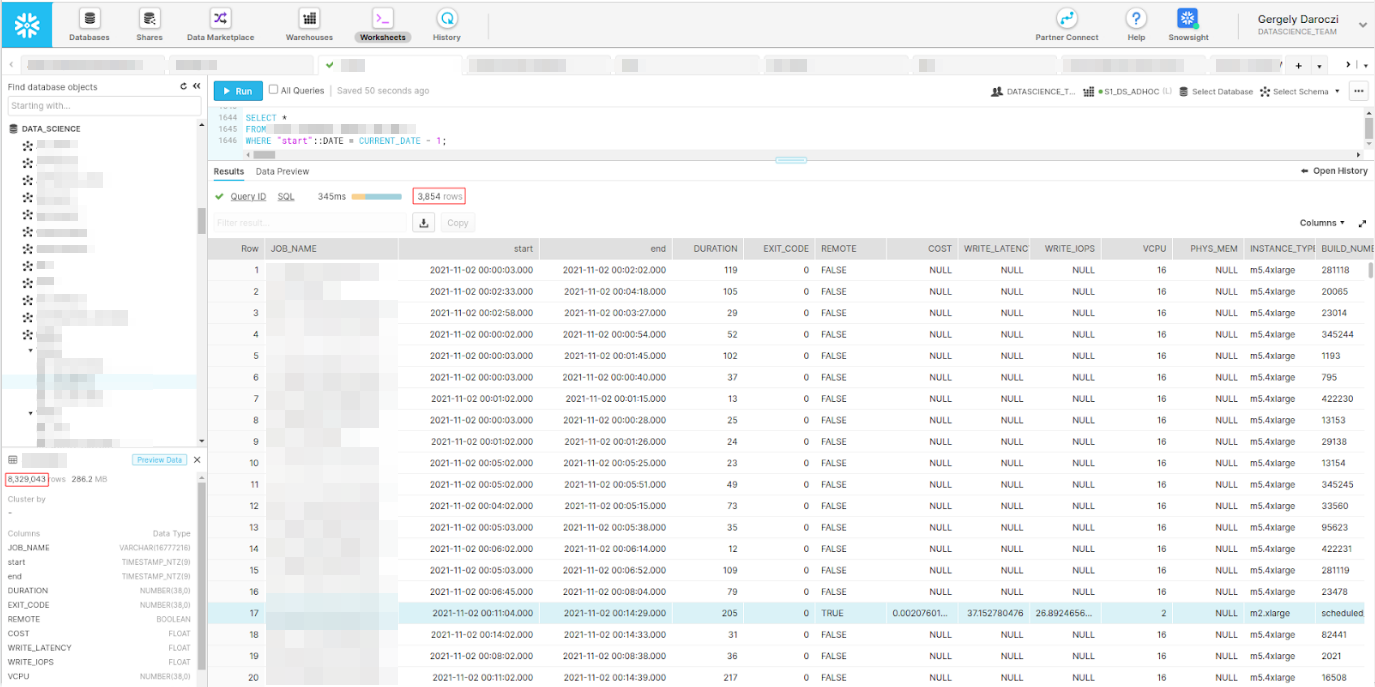

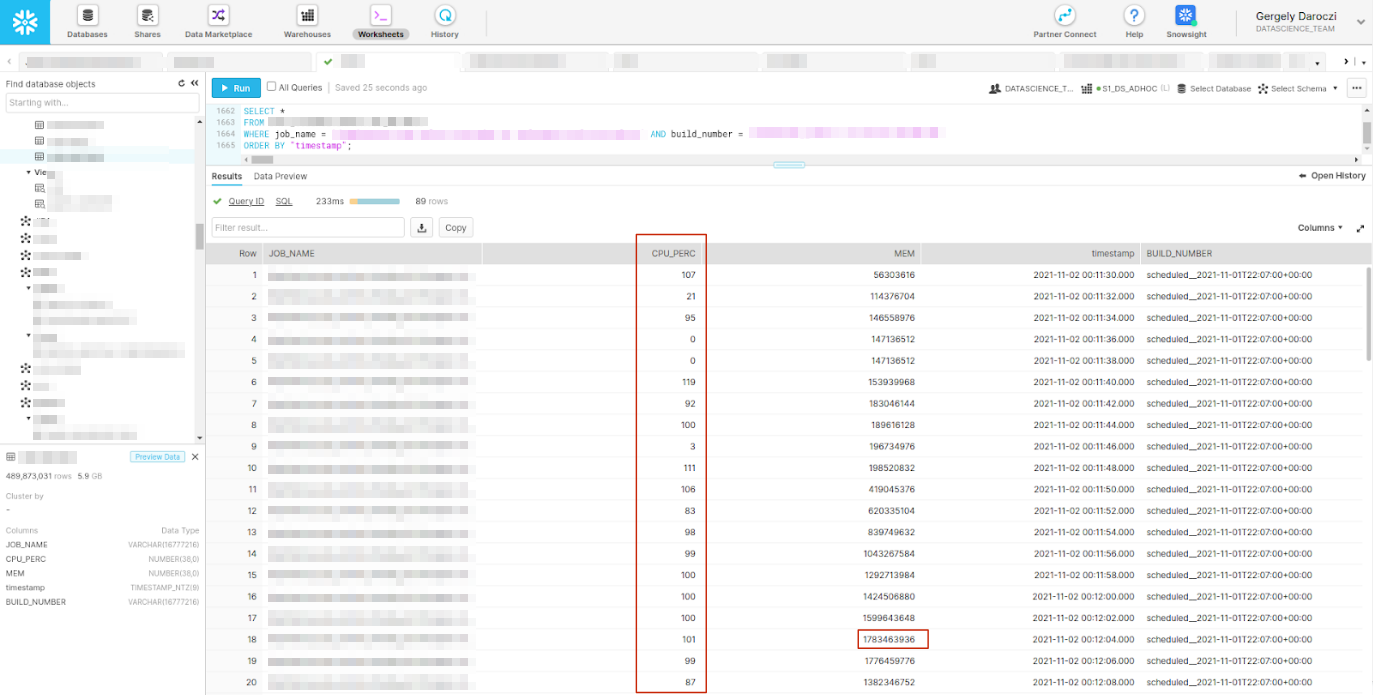

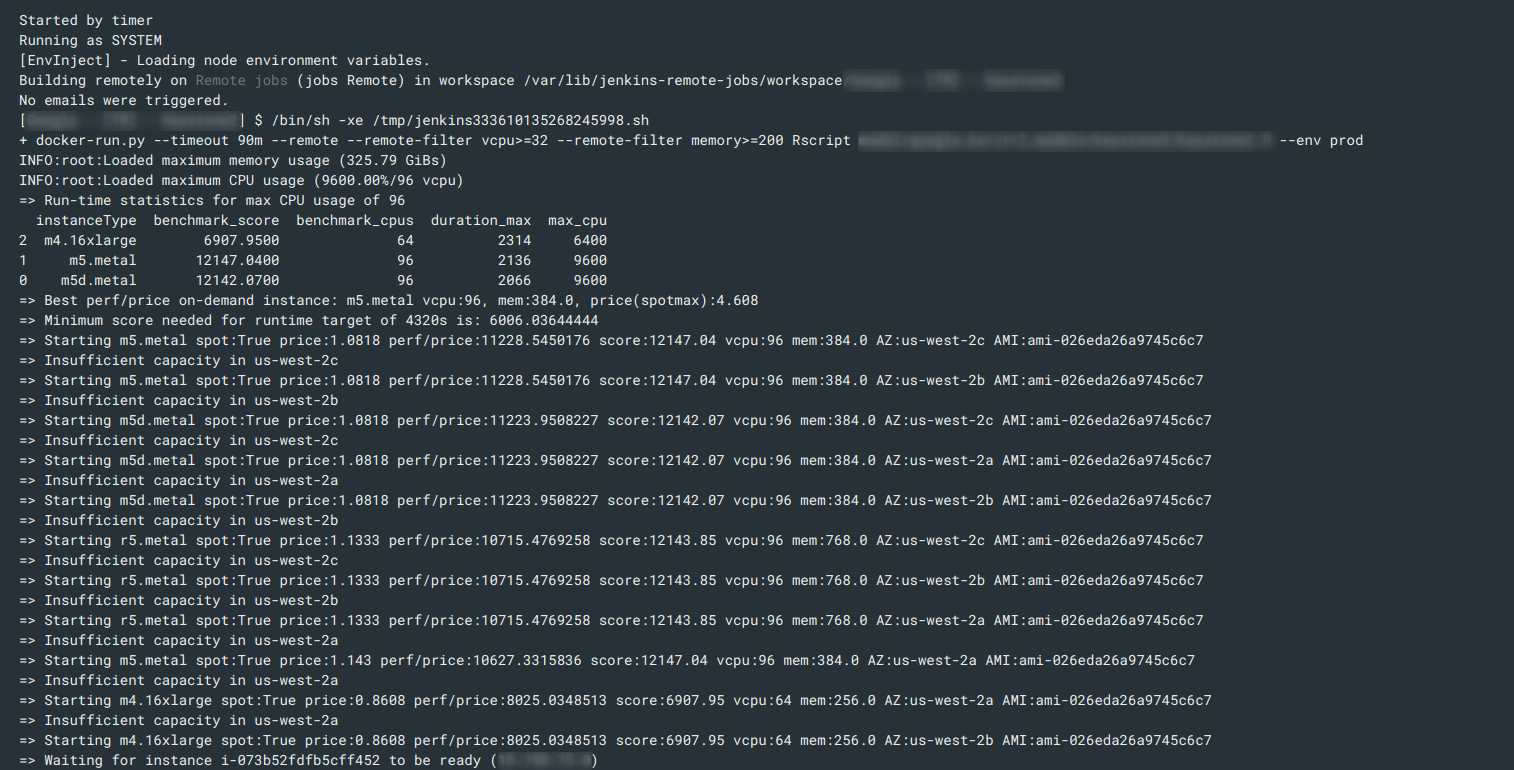

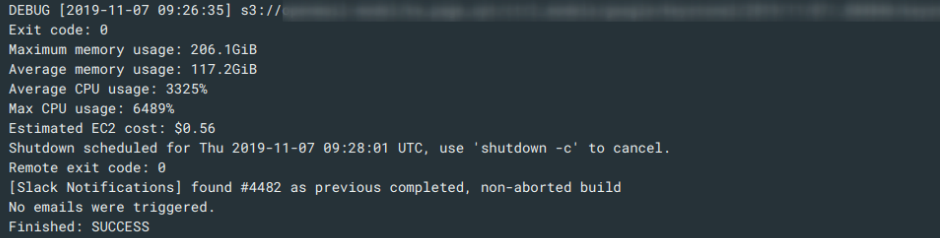

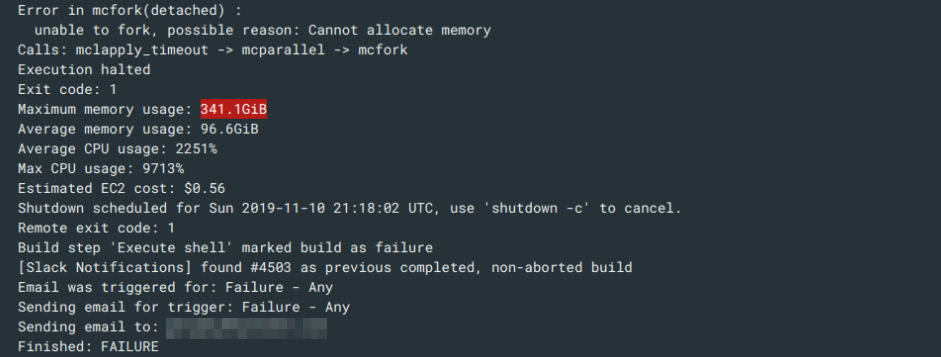

Data Science / Machine Learning batch jobs:

- run SQL

- run R or Python script

- train a simple model, reporting, API integrations etc.

- train hierarchical models/GBMs/neural nets etc.

Scaling (DS) infrastructure.

>>> from spare_cores import why

AWS ECS

AWS Batch

Kubernetes

>>> from spare_cores import why

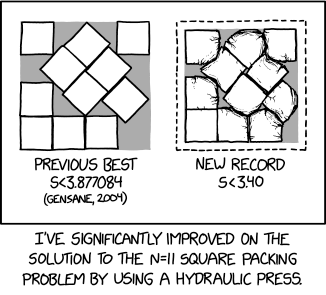

Source: xkcd

>>> from spare_cores import why

Other use-cases:

- stats/ML/AI model training,

- ETL pipelines,

- traditional CI/CD workflows for compiling and testing software,

- building Docker images,

- rendering images and videos,

- etc.

>>> from spare_cores import why

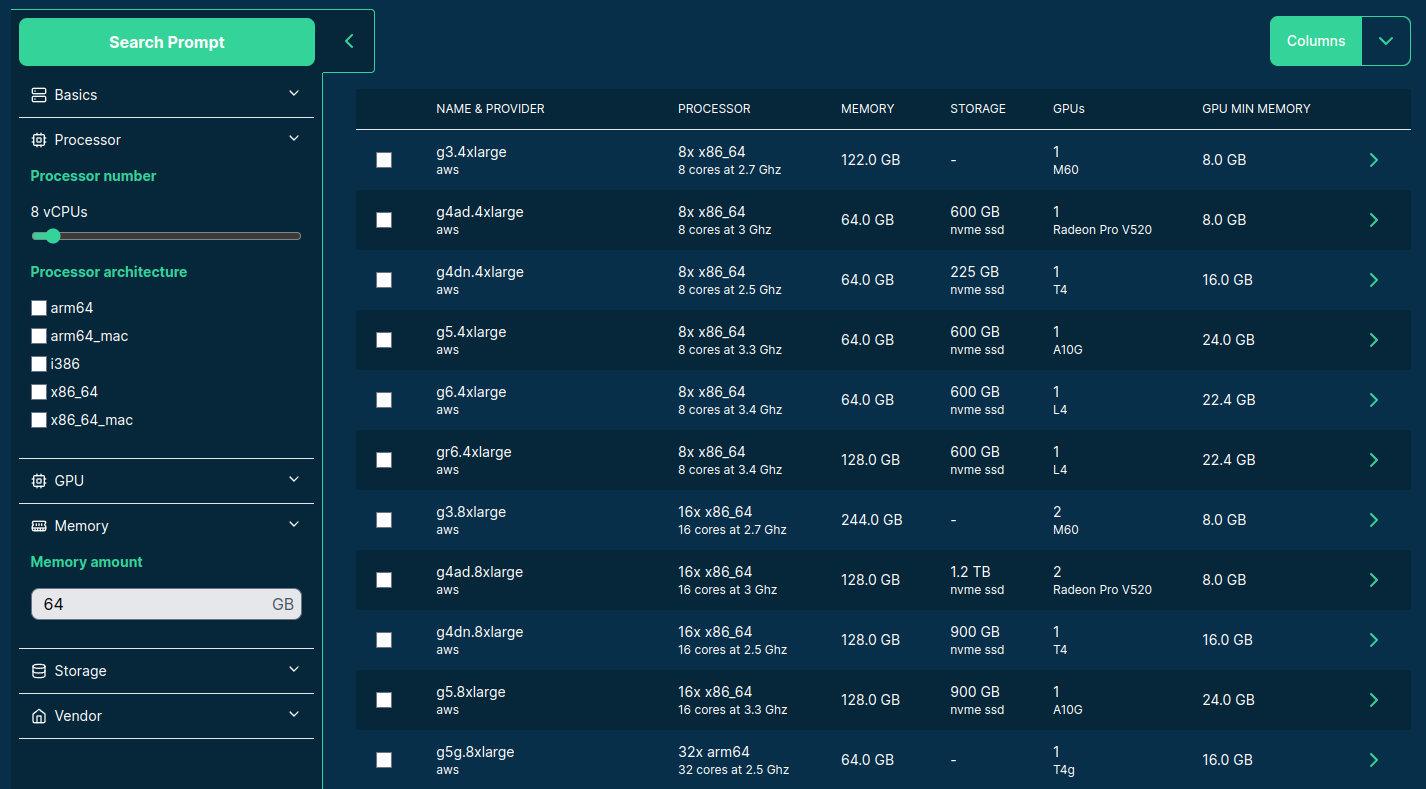

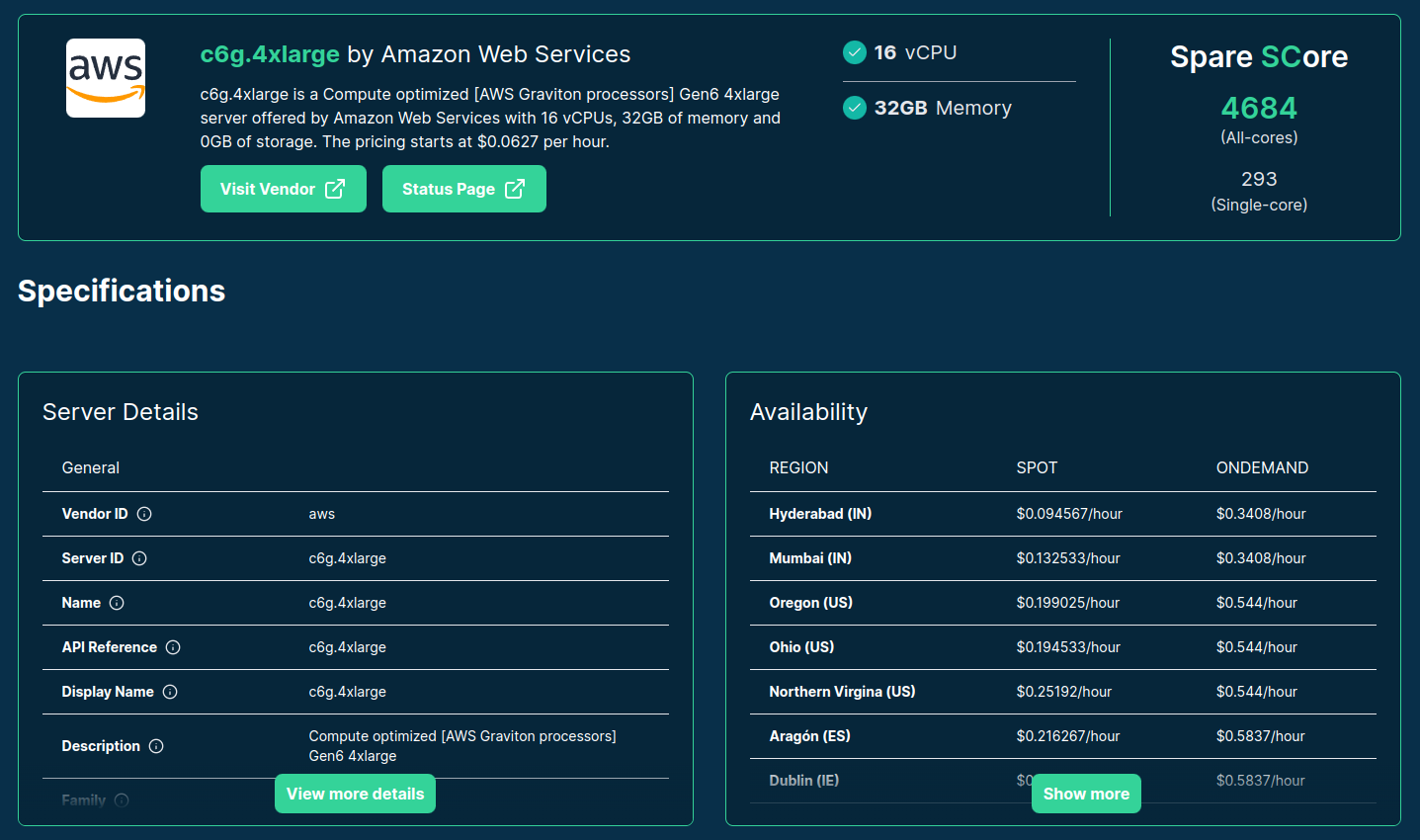

>>> from spare_cores import intro

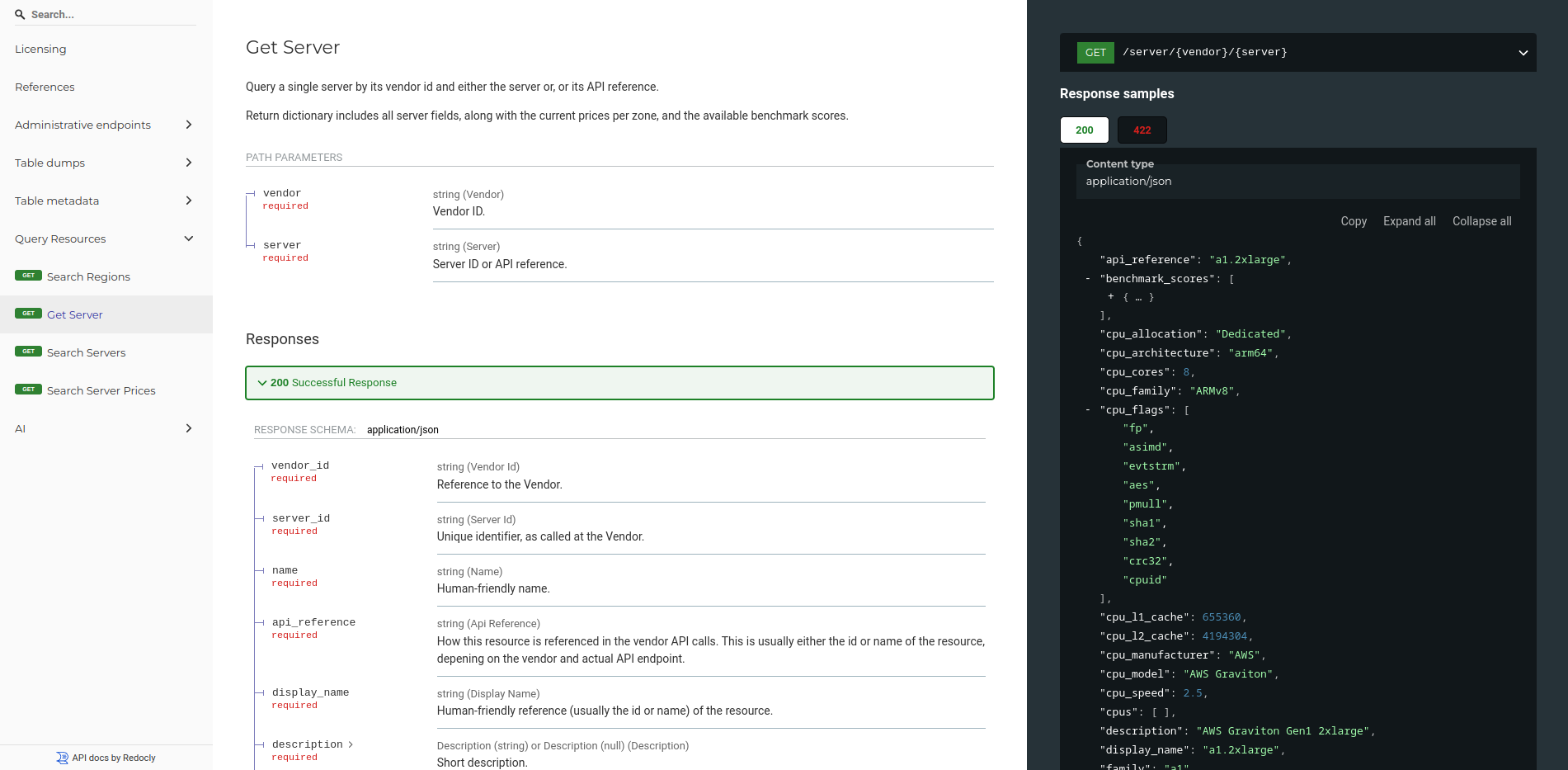

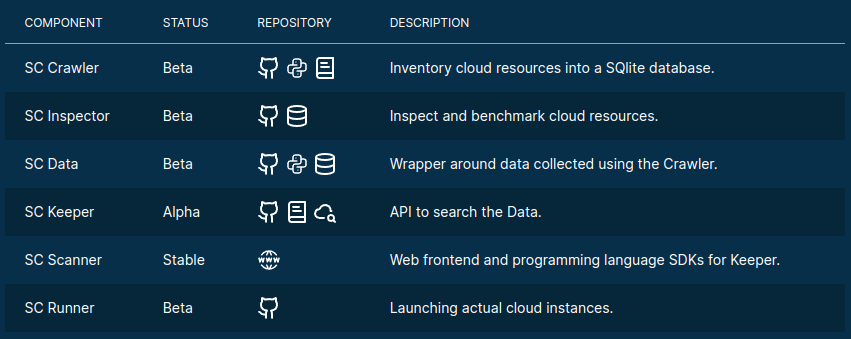

- Open-source tools, database schemas and documentation to inspect and inventory cloud vendors and their compute resource offerings.

- Managed infrastructure, databases, APIs, SDKs, and web applications to make these data sources publicly accessible.

- Helpers to start and manage instances in your own environment.

- SaaS to run containers in a managed environment without direct vendor engagement.

>>> from spare_cores import intro

Source: sparecores.com

>>> from spare_cores import intro

>>> from rich import print as pp

>>> from sc_crawler.tables import Server

>>> from sqlmodel import create_engine, Session, select

>>> engine = create_engine("sqlite:///sc-data-all.db")

>>> session = Session(engine)

>>> server = session.exec(select(Server).where(Server.server_id == 'g4dn.xlarge')).one()

>>> pp(server)

Server(

server_id='g4dn.xlarge',

vendor_id='aws',

display_name='g4dn.xlarge',

api_reference='g4dn.xlarge',

name='g4dn.xlarge',

family='g4dn',

description='Graphics intensive [Instance store volumes] [Network and EBS optimized] Gen4 xlarge',

status=<Status.ACTIVE: 'active'>,

observed_at=datetime.datetime(2024, 6, 6, 10, 18, 4, 127254),

hypervisor='nitro',

vcpus=4,

cpu_cores=2,

cpu_allocation=<CpuAllocation.DEDICATED: 'Dedicated'>,

cpu_manufacturer='Intel',

cpu_family='Xeon',

cpu_model='8259CL',

cpu_architecture=<CpuArchitecture.X86_64: 'x86_64'>,

cpu_speed=3.5,

cpu_l1_cache=None,

cpu_l2_cache=None,

cpu_l3_cache=None,

cpu_flags=[],

memory_amount=16384,

memory_generation=<DdrGeneration.DDR4: 'DDR4'>,

memory_speed=3200,

memory_ecc=None,

gpu_count=1,

gpu_memory_min=16384,

gpu_memory_total=16384,

gpu_manufacturer='Nvidia',

gpu_family='Turing',

gpu_model='Tesla T4',

gpus=[

{

'manufacturer': 'Nvidia',

'family': 'Turing',

'model': 'Tesla T4',

'memory': 15360,

'firmware_version': '535.171.04',

'bios_version': '90.04.96.00.A0',

'graphics_clock': 1590,

'sm_clock': 1590,

'mem_clock': 5001,

'video_clock': 1470

}

],

storage_size=125,

storage_type=<StorageType.NVME_SSD: 'nvme ssd'>,

storages=[{'size': 125, 'storage_type': 'nvme ssd'}],

network_speed=5.0,

inbound_traffic=0.0,

outbound_traffic=0.0,

ipv4=0,

)

>>> spare_cores.__dir__()

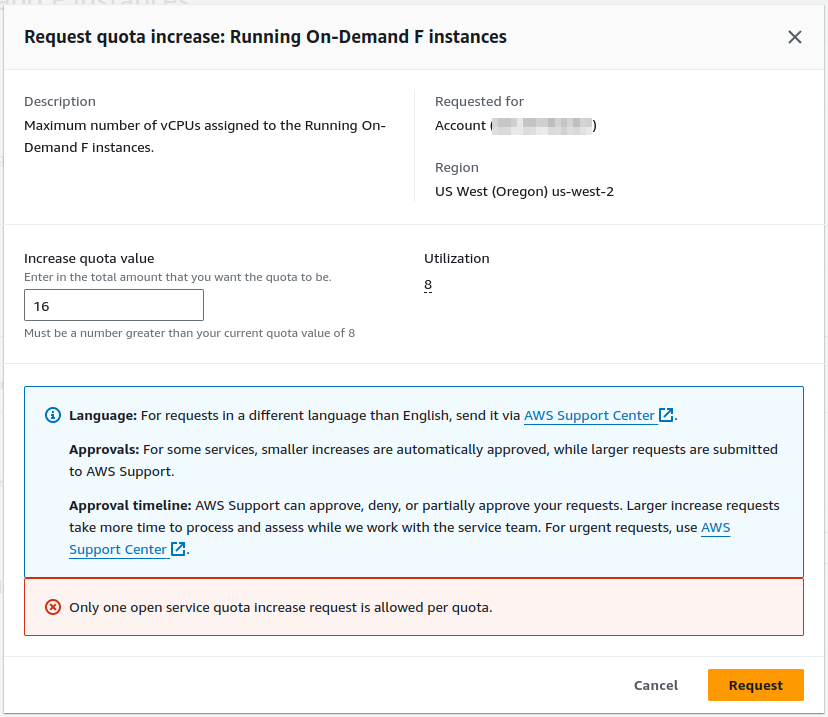
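The `Server` record printed earlier comes from a SQLite file (`sc-data-all.db`), so the same data can in principle be filtered with plain SQL. The following is a minimal sketch using only the standard library. It assumes a table named `server` whose columns match the field names in the printed record, and it runs against an in-memory stand-in rather than the real database. Only the `g4dn.xlarge` row uses values from the record above; the other two rows are hypothetical.

```python
import sqlite3

# In-memory stand-in for the real database; the table name is an assumption.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE server (
        vendor_id TEXT,
        server_id TEXT,
        vcpus INTEGER,
        memory_amount INTEGER,     -- MiB
        gpu_count INTEGER,
        gpu_memory_total INTEGER   -- MiB
    )
    """
)
conn.executemany(
    "INSERT INTO server VALUES (?, ?, ?, ?, ?, ?)",
    [
        ("aws", "g4dn.xlarge", 4, 16384, 1, 16384),       # values from the record above
        ("example", "cpu-only-small", 2, 4096, 0, None),  # hypothetical row
        ("example", "gpu-large", 16, 65536, 2, 49152),    # hypothetical row
    ],
)


def gpu_servers(conn, min_gpu_memory_mib):
    """Return (vendor_id, server_id) pairs with at least the given total GPU memory."""
    query = """
        SELECT vendor_id, server_id
        FROM server
        WHERE gpu_count > 0 AND gpu_memory_total >= ?
        ORDER BY gpu_memory_total, server_id
    """
    return conn.execute(query, (min_gpu_memory_mib,)).fetchall()


print(gpu_servers(conn, 16000))
```

Pointing `sqlite3.connect` at the downloaded database file instead of `:memory:` should work the same way, provided the table and column names match this assumption.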

>>> spare_cores.challenges("quotas")

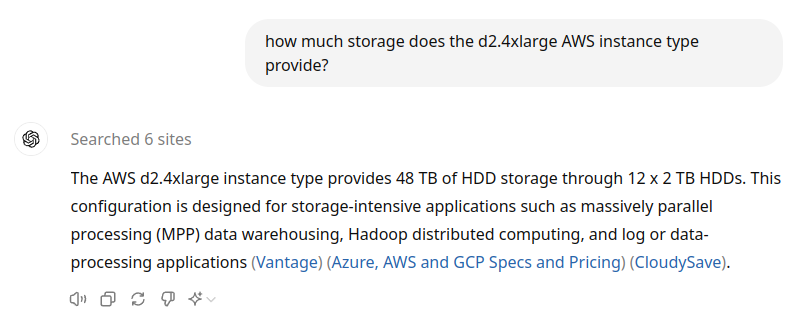

>>> spare_cores.challenges("LLMs")

>>> spare_cores.challenges("data")

>>> from sc_crawler import pricing

- No way to find SKUs by filtering in the API call. Get all, search locally.

- `f1-micro` is one out of 2 instances with simple pricing.

- For other instances, look up SKUs for CPU + RAM and do the math.

- Match instance family with SKU via search in description, e.g. `C2D`.

- Except for `c2`, which is called “Compute optimized”.

- And `m2` is actually priced at a premium on top of `m1`.

- The `n1` resource group is not CPU/RAM, but `N1Standard`; extract whether it’s a CPU or RAM price from the description.
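The "look up SKUs for CPU + RAM and do the math" step above can be sketched roughly as follows. This is an illustration only, not the `sc_crawler` implementation: the `Sku` class, the `find_sku` and `hourly_price` helpers, the SKU descriptions, and all prices are hypothetical placeholders. It shows the local search by description, the alias needed for `c2`, and the per-vCPU plus per-GiB sum.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sku:
    description: str     # free-text description, the only place the family shows up
    resource: str        # "CPU" (price per vCPU-hour) or "RAM" (price per GiB-hour)
    hourly_price: float


# Hypothetical SKUs: descriptions and prices are placeholders, not vendor data.
SKUS = [
    Sku("C2D Instance Core", "CPU", 0.03),
    Sku("C2D Instance Ram", "RAM", 0.004),
    Sku("Compute optimized Core", "CPU", 0.034),
    Sku("Compute optimized Ram", "RAM", 0.0045),
]

# Families whose SKU description does not mention the family name.
DESCRIPTION_ALIASES = {"c2": "Compute optimized"}


def find_sku(family: str, resource: str, skus=SKUS) -> Sku:
    """Search all SKUs locally for the one matching a family and resource type."""
    # The trailing space keeps "C2 " from matching "C2D ...".
    prefix = DESCRIPTION_ALIASES.get(family, family.upper()) + " "
    matches = [
        s for s in skus
        if s.resource == resource and s.description.startswith(prefix)
    ]
    if len(matches) != 1:
        raise LookupError(
            f"expected one {resource} SKU for {family}, found {len(matches)}"
        )
    return matches[0]


def hourly_price(family: str, vcpus: int, memory_gib: float, skus=SKUS) -> float:
    """Instance price = vCPUs x CPU SKU price + GiB of RAM x RAM SKU price."""
    cpu = find_sku(family, "CPU", skus)
    ram = find_sku(family, "RAM", skus)
    return vcpus * cpu.hourly_price + memory_gib * ram.hourly_price


print(round(hourly_price("c2d", vcpus=2, memory_gib=8), 4))
print(round(hourly_price("c2", vcpus=4, memory_gib=16), 4))
```

Raising on anything other than exactly one match is a deliberate choice in this sketch: with description-based matching, a silent wrong match would be harder to notice than a failed lookup. Cases such as the `m2` premium or the `N1Standard` resource group would need extra rules that are not modeled here.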

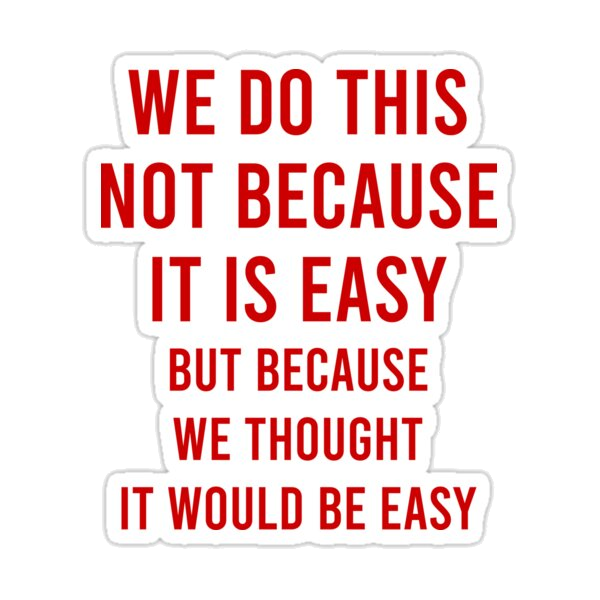

>>> import sc_data

Source: dbhub.io/sparecores

>>> changelogs.get("spare_cores")

- Featured in The Pragmatic Engineer’s The Pulse

- Top post on the AWS subreddit

- 6 conferences in 5 countries (2024)

- New vendor: UpCloud

- Web update: Embed any Spare Cores chart as an iframe

- Web update: Custom list of servers for comparison

- Web update: Order and filter by any benchmark score

- New benchmarks: PassMark

>>> changelogs.get("spare_cores")

New benchmarks: LLM inference (`pp` and `tg` up to 32k tokens):

- SmolLM-135M.Q4_K_M.gguf (135M params; 100 MB)

- Qwen1.5-0.5B-Chat.gguf (0.5B params; 400 MB)

- gemma-2b.Q4_K_M.gguf (2B params; 1.5 GB)

- LLaMA-7b.gguf (7B params; 4 GB)

- phi-4-q4.gguf (14B params; 9 GB)

- Llama-3.3-70B-Instruct-Q4_K_M.gguf (70B params; 42 GB)
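As a rough illustration of why the model sizes matter, the sketch below checks which of the listed model files would fit into a given amount of GPU memory, such as the 15360 MiB reported for the Tesla T4 in the `g4dn.xlarge` record. The `models_that_fit` helper and the 0.8 headroom factor (leaving room for context and runtime overhead) are assumptions for illustration, not part of the benchmark suite, and the file sizes are the rounded figures from the list above.

```python
# Approximate model file sizes in GB, as listed above.
MODEL_SIZES_GB = {
    "SmolLM-135M.Q4_K_M.gguf": 0.1,
    "Qwen1.5-0.5B-Chat.gguf": 0.4,
    "gemma-2b.Q4_K_M.gguf": 1.5,
    "LLaMA-7b.gguf": 4,
    "phi-4-q4.gguf": 9,
    "Llama-3.3-70B-Instruct-Q4_K_M.gguf": 42,
}


def models_that_fit(memory_mib: int, headroom: float = 0.8) -> list[str]:
    """List models whose file size is within a fraction of the available memory.

    The headroom factor is an arbitrary assumption; GB and GiB are treated as
    interchangeable because the listed sizes are rounded anyway.
    """
    budget_gb = memory_mib / 1024 * headroom
    return [name for name, size in MODEL_SIZES_GB.items() if size <= budget_gb]


# 15360 MiB is the GPU memory of the Tesla T4 in the record shown earlier.
for name in models_that_fit(15360):
    print(name)
```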

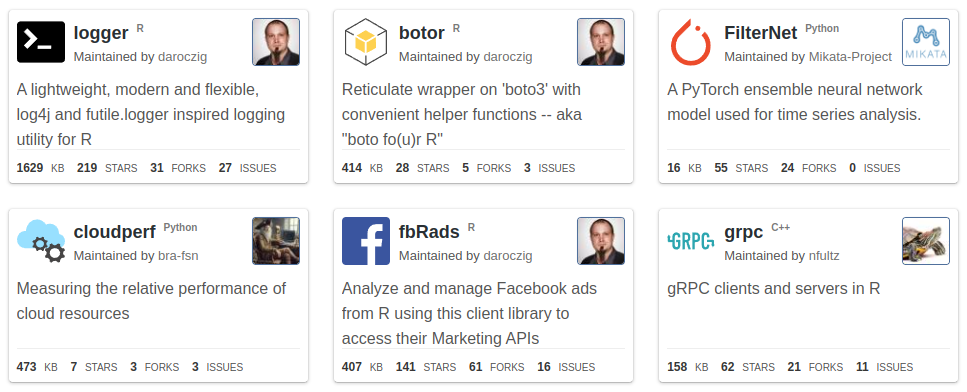

>>> from spare_cores import team

@bra-fsn

Infrastructure and Python veteran.

@palabola

Guardian of the front-end and Node.js tools.

@daroczig

Hack of all trades, master of NaN.

>>> from spare_cores import support

Slides: sparecores.com/talks